I am a Postdoctoral Machine Learning Researcher at the University of Edinburgh, working with Desmond Higham. My research focusses on making AI more reliable by understanding and exploiting geometric constraints that arise in neural network models.

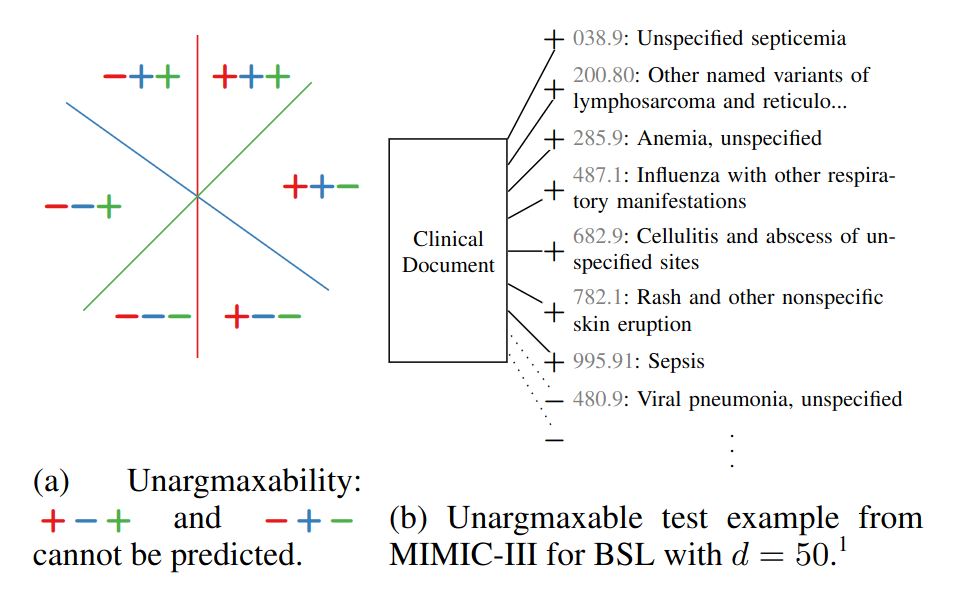

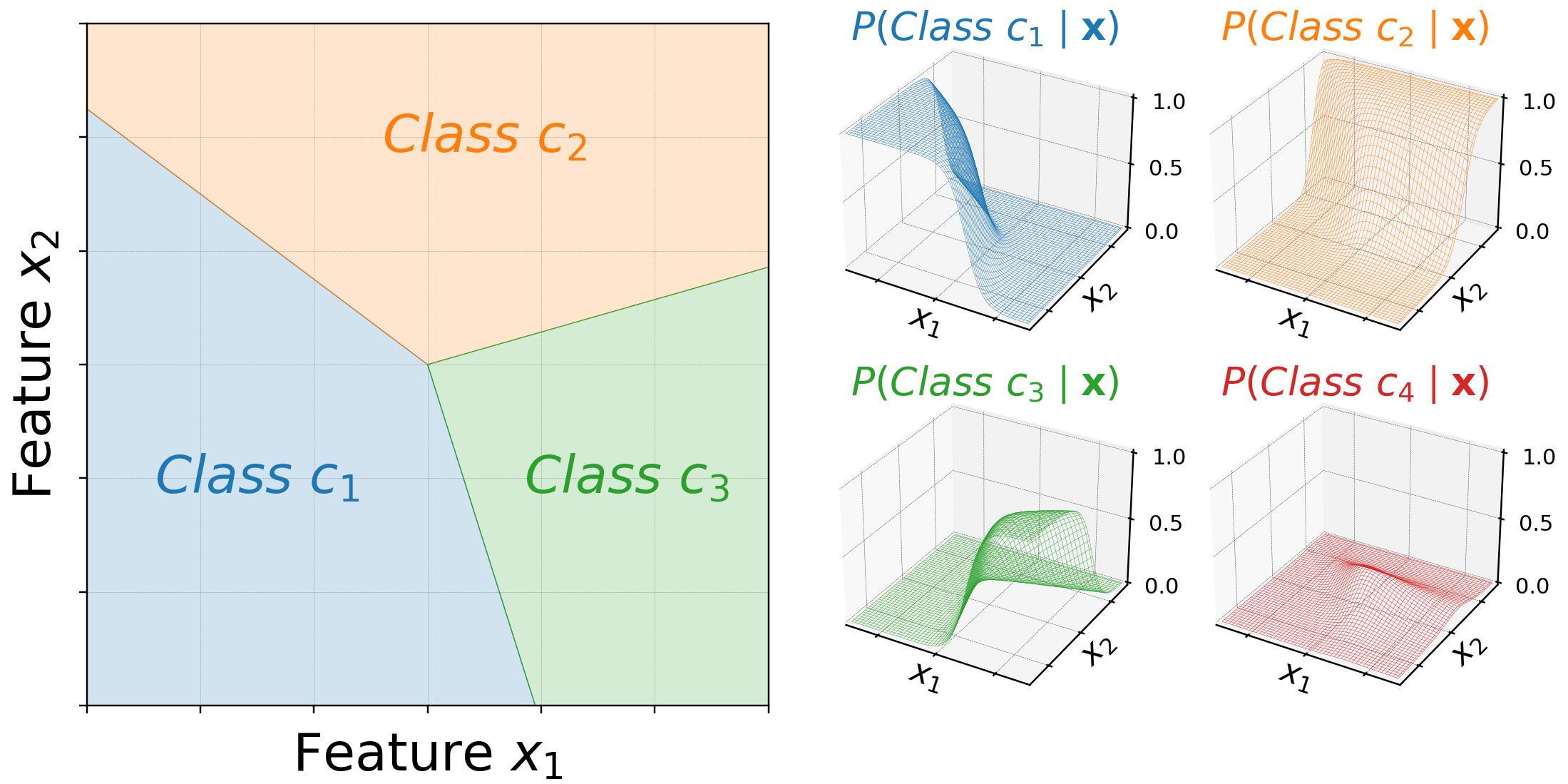

More specifically, I study the output layer of deep neural networks that have a large number of outputs, e.g. Large Language Models (LLMs). Any such output layer that has more outputs than inputs unavoidably has outputs that are impossible to predict. I call such outputs unargmaxable. My research identifies unargmaxable outputs in LLMs and Clinical NLP models and proposes replacement layers which guarantee that outputs of interest can be predicted.
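As a toy illustration of what unargmaxable means, here is a minimal sketch. It assumes a bias-free linear output layer with 2 input features and 5 outputs; the weights and the helper names `argmax_class` and `reachable_classes` are made up for this example and are not taken from the papers below. Softmax preserves the ordering of the logits, so comparing logits is enough. The last weight vector lies strictly inside the convex hull of the others, so no input can make it the argmax.

```python
import math

# Hypothetical weights: one row per output class, 2 input features, no bias.
WEIGHTS = [
    (1.0, 0.0),
    (-1.0, 0.0),
    (0.0, 1.0),
    (0.0, -1.0),
    (0.2, 0.1),  # lies strictly inside the convex hull of the other rows
]


def argmax_class(x, weights=WEIGHTS):
    """Return the index of the class with the largest logit w . x."""
    logits = [w[0] * x[0] + w[1] * x[1] for w in weights]
    return max(range(len(logits)), key=logits.__getitem__)


def reachable_classes(weights=WEIGHTS, n_angles=3600):
    """Collect every class that wins the argmax for some input direction.

    Without a bias, the argmax depends only on the direction of x, so a
    scan of the unit circle covers all non-zero inputs up to the grid
    resolution. This is an empirical check, not a proof.
    """
    winners = set()
    for k in range(n_angles):
        theta = 2.0 * math.pi * k / n_angles
        winners.add(argmax_class((math.cos(theta), math.sin(theta)), weights))
    return winners


reachable = reachable_classes()
unargmaxable = sorted(set(range(len(WEIGHTS))) - reachable)
print("reachable:", sorted(reachable))
print("unargmaxable:", unargmaxable)
```

In this sketch the scan reports class 4 as never winning: for any unit-length input, one of the four outer classes scores at least about 0.707, while class 4 scores at most about 0.224.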

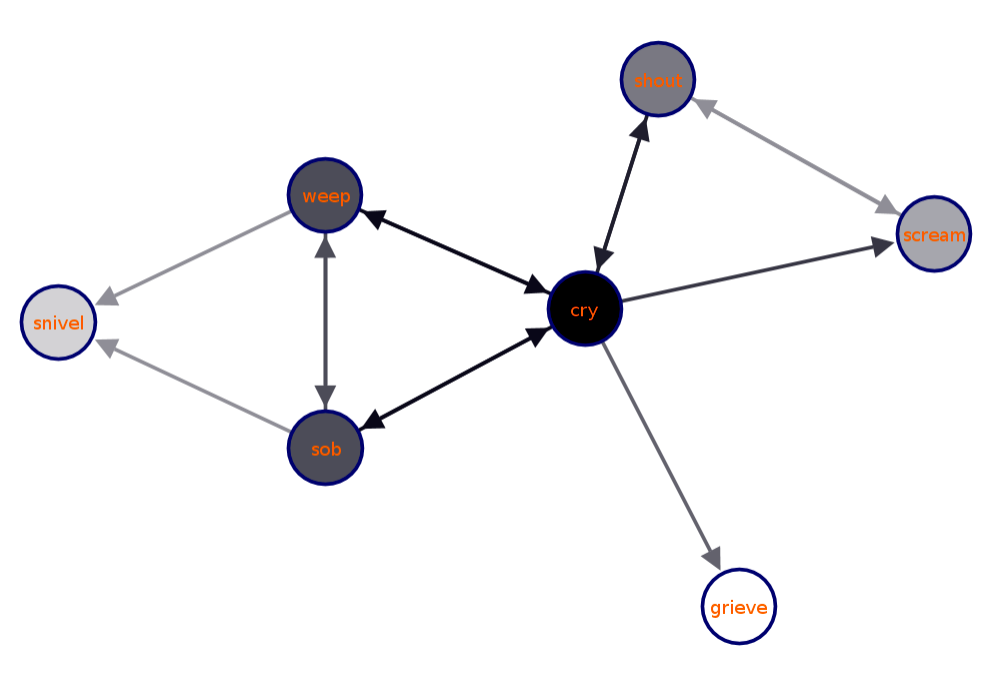

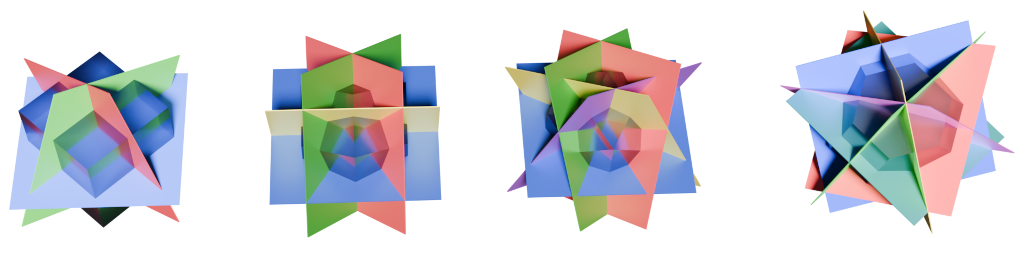

More generally, I have a soft spot for language and structure and I enjoy creating interactive visualisations of geometric representations I am learning about; here are some examples. I completed my PhD in 2024, supervised by Adam Lopez and Antonio Vergari. During my PhD, I was also a part-time Research Assistant on Information Extraction from news articles and clinical text under the supervision of Beatrice Alex.

Selected publications

Taming the Sigmoid Bottleneck: Provably Argmaxable Sparse Multi-Label Classification. Andreas Grivas, Antonio Vergari and Adam Lopez. Accepted at AAAI 2024.

See also: Poster, Interactive visualisation (i), Interactive visualisation (ii)

Low-Rank Softmax Can Have Unargmaxable Classes in Theory but Rarely in Practice. Andreas Grivas, Nikolay Bogoychev and Adam Lopez. ACL 2022 (Oral Presentation)

See also: Poster, Interactive visualisation

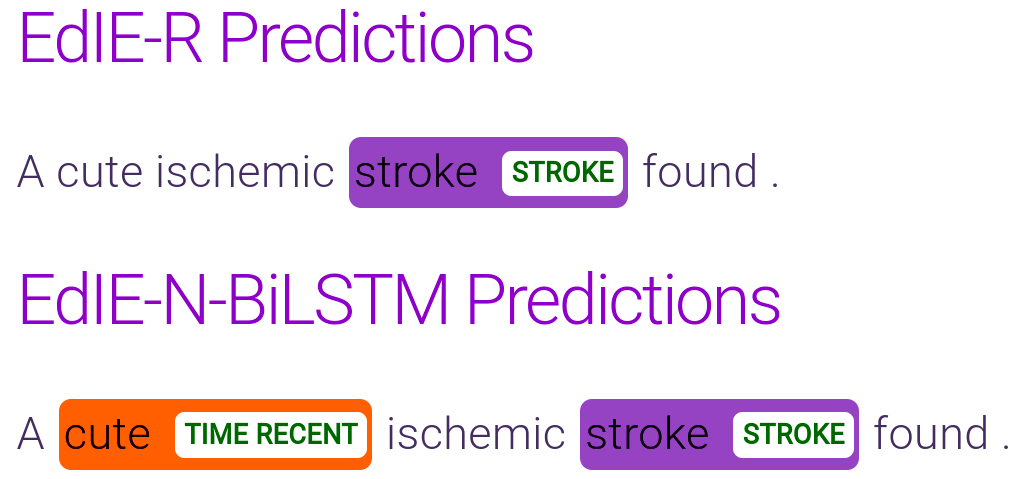

Not a cute stroke: Analysis of Rule- and Neural Network-based Information Extraction Systems for Brain Radiology Reports. Andreas Grivas, Beatrice Alex, Claire Grover, Richard Tobin and William Whiteley. LOUHI 2020

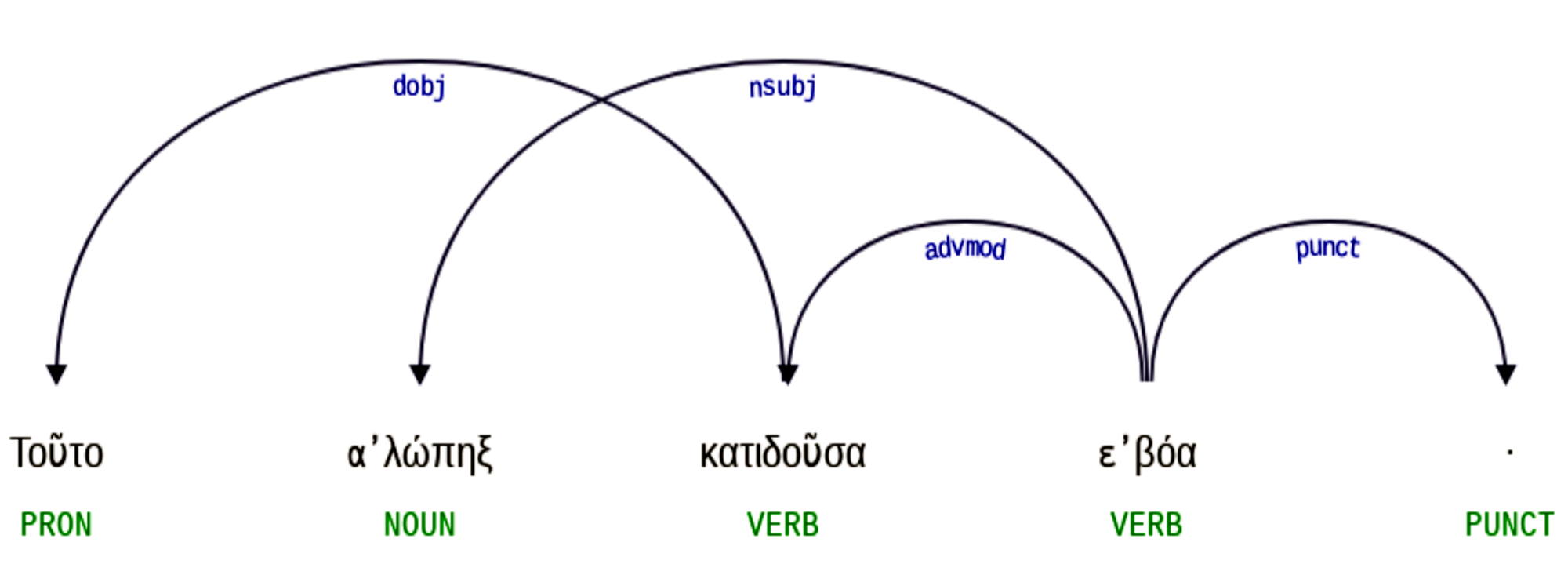

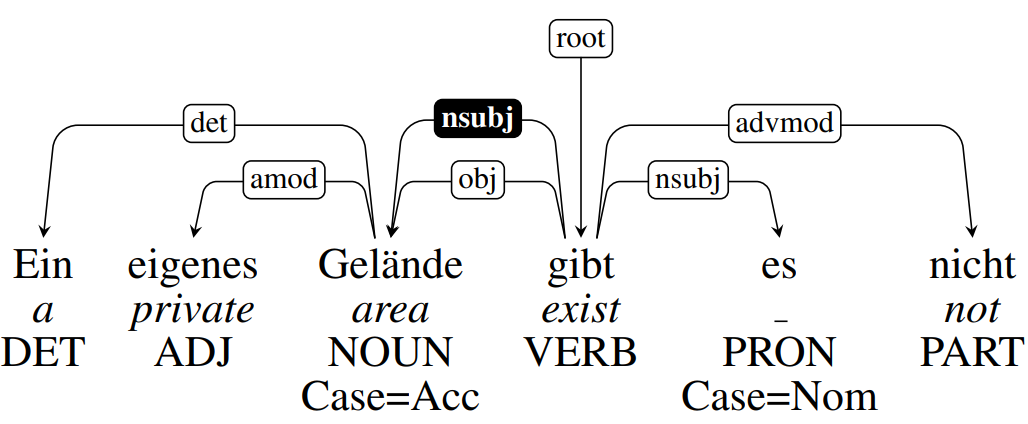

What do Character-level Models Learn About Morphology? The Case of Dependency Parsing. Clara Vania, Andreas Grivas, and Adam Lopez. EMNLP 2018

Dissertations

PhD Thesis, University of Edinburgh (2024)

- My vimrc and other dotfiles can be found here.

- My public key is 24A721BB42D9A790